Motion Detection in WLANs using PHY Layer Information

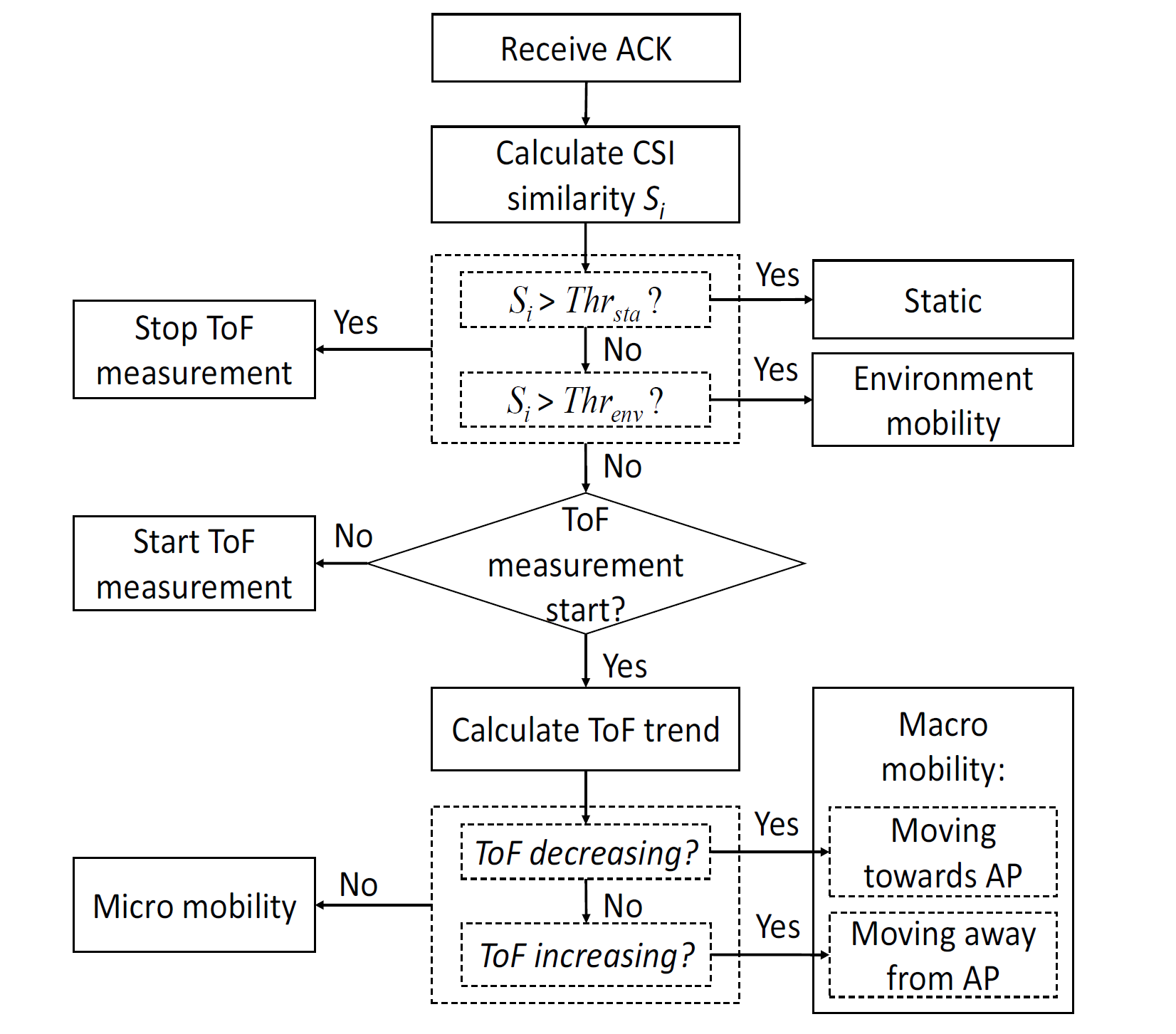

In this project, we demonstrate that it is possible to enable fine-grained human motion detection on commodity WiFi devices by exploiting PHY layer information available from today's WiFi chipsets.

In the first part of this project, we demonstrate how PHY layer information -- Channel State Information (CSI) and Time-of-Flight (ToF) values -- available from commodity APs can be leveraged to detect and classify different client mobility modes without any software modifications on the client side. We identify four broad categories of client mobility: static clients, environmental mobility (the client is static but the channel changes due to external movements), micro-mobility (the client's location is confined within a small area), and macro-mobility (the client moves towards or away from the AP).

We show that the temporal changes in the CSI can be used to detect static, environmental, and device mobility. However, the changes in CSI are similar for micro- and macro-mobility scenarios. To distinguish between these two mobility modes, we observe that the client's distance from the AP, which is reflected in the ToF, changes significantly under macro-mobility. The increasing vs. decreasing trend of the client's distance can further indicate its relative heading with respect to the AP.

In addition, we demonstrate how fine-grained mobility classification can improve the performance of client roaming, rate control, frame aggregation, and MIMO beamforming. Our testbed experiments show that our mobility classification algorithm achieves more than 92% accuracy in a variety of scenarios, and the combined throughput gain of all four mobility-aware protocols over their mobility-oblivious counterparts can be more than 100%.
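The two-stage decision described above (CSI changes first, then the ToF trend) can be illustrated with a minimal sketch. This is not the project's algorithm: the similarity metric (Pearson correlation between consecutive CSI magnitude snapshots), the assumption that environmental mobility yields moderate similarity while device mobility yields low similarity, all threshold values, and the function names are hypothetical choices made only for illustration.

```python
from math import sqrt
from statistics import mean


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0
    return cov / sqrt(va * vb)


def csi_similarity(csi_frames):
    """Mean correlation between consecutive CSI magnitude snapshots."""
    return mean(pearson(p, q) for p, q in zip(csi_frames, csi_frames[1:]))


def slope(values):
    """Least-squares slope of the samples against their index."""
    n = len(values)
    xm, ym = (n - 1) / 2, mean(values)
    num = sum((i - xm) * (v - ym) for i, v in enumerate(values))
    den = sum((i - xm) ** 2 for i in range(n))
    return num / den


def classify(csi_frames, tof_samples,
             static_thr=0.98, env_thr=0.90, tof_slope_thr=0.5):
    """Hypothetical classifier; every threshold here is an assumption."""
    sim = csi_similarity(csi_frames)
    if sim >= static_thr:
        return "static"
    if sim >= env_thr:
        return "environmental"
    # CSI alone cannot separate micro- from macro-mobility, so use the ToF trend.
    s = slope(tof_samples)
    if abs(s) < tof_slope_thr:
        return "micro"
    # Increasing ToF means growing distance, i.e. moving away from the AP.
    return "macro-away" if s > 0 else "macro-towards"
```

For example, `classify([[1, 2, 3, 4], [4, 3, 2, 1]] * 2, [10, 11, 12, 13, 14])` falls through the CSI checks and is labelled by the rising ToF trend. In practice the thresholds would have to be calibrated against measured traces.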


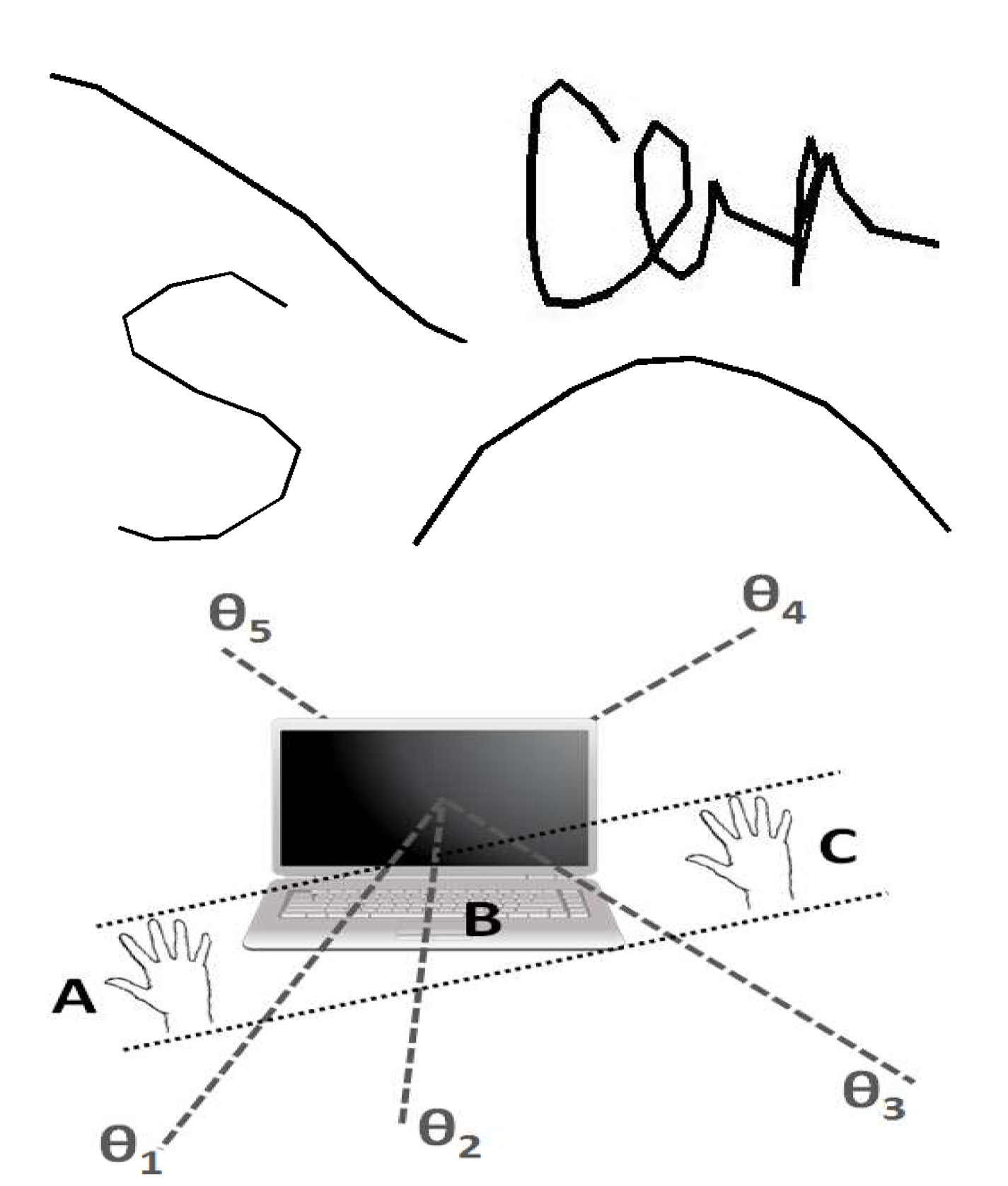

In the second part of the project, we introduce WiDraw, the first hand motion tracking solution leveraging wireless signals that can be enabled on existing mobile devices using only a software patch, without requiring prior learning or the use of any wearable. WiDraw utilizes the presence of a large number of WiFi devices in today's WLANs. It leverages the Angle-of-Arrival (AoA) values of incoming wireless signals at the mobile device to track the detailed trajectory of the user's hand in both Line-of-Sight and Non-Line-of-Sight scenarios.

The intuition behind WiDraw is that whenever the user's hand blocks a signal coming from a certain direction, the signal strength of the AoA representing the same direction will experience a sharp drop. Therefore, by tracking the signal strength of the AoAs, it is feasible to determine when and where such occlusions happen and further determine a set of horizontal and vertical coordinates along the hand's trajectory, given a depth value. The depth of the user's hand can also be approximated using the drop in the overall signal strength. By estimating the hand's depth, along with horizontal and vertical coordinates, WiDraw is able to track the user's hand in 3-D space with respect to the WiFi antennas of the receiver.
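The occlusion intuition above can be sketched in a few lines. This is an illustrative simplification rather than WiDraw's implementation: the fixed drop threshold, the representation of each AoA as an (azimuth, elevation) pair in degrees, the tangent-based projection onto a plane at the given depth, and the function names are all assumptions.

```python
import math


def detect_occlusions(strengths_db, drop_db=6.0):
    """Return (time_index, aoa) pairs where an AoA's strength drops sharply.

    strengths_db maps a hypothetical (azimuth_deg, elevation_deg) tuple to a
    list of signal-strength samples in dB, one per time step.
    """
    events = []
    for aoa, series in strengths_db.items():
        for t in range(1, len(series)):
            # A sharp drop between consecutive samples suggests the hand
            # is blocking the signal arriving from this direction.
            if series[t - 1] - series[t] >= drop_db:
                events.append((t, aoa))
    return sorted(events)


def to_xy(azimuth_deg, elevation_deg, depth_cm):
    """Project an occluded direction onto a plane at the given depth (assumed geometry)."""
    x = depth_cm * math.tan(math.radians(azimuth_deg))
    y = depth_cm * math.tan(math.radians(elevation_deg))
    return x, y


def trajectory(strengths_db, depth_cm, drop_db=6.0):
    """Time-ordered (x, y) points, one per detected occlusion."""
    return [to_xy(az, el, depth_cm)
            for _, (az, el) in detect_occlusions(strengths_db, drop_db)]
```

Here the depth is passed in as a known value; the text notes that it can be approximated from the drop in overall signal strength, which this sketch does not attempt.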

We demonstrate the feasibility of WiDraw by building a software prototype on HP Envy laptops, using Atheros AR9590 chipsets and 3 antennas. We show that by utilizing the AoAs from up to 25 WiFi transmitters, a WiDraw-enabled laptop can track the user's hand with a median error lower than 5 cm. WiDraw's rate of false positives -- detecting motion when none occurs -- is less than 0.04 events per minute over a 360-minute period in a busy office environment. Experiments across 10 different users also demonstrate that WiDraw can be used to write words and sentences in the air, achieving a mean word recognition accuracy of 91%.

People

Faculty

Students

External Collaborators

Publications

Funding

Li Sun worked at HP Labs as an intern for the duration of the project.