There Are Ghosts in Your Machine: Cybersecurity Researcher Can Make Self-Driving Cars Hallucinate

ECE/Khoury Professor Kevin Fu is researching methods to prevent cyberattacks on self-driving cars that rely on optical illusions.

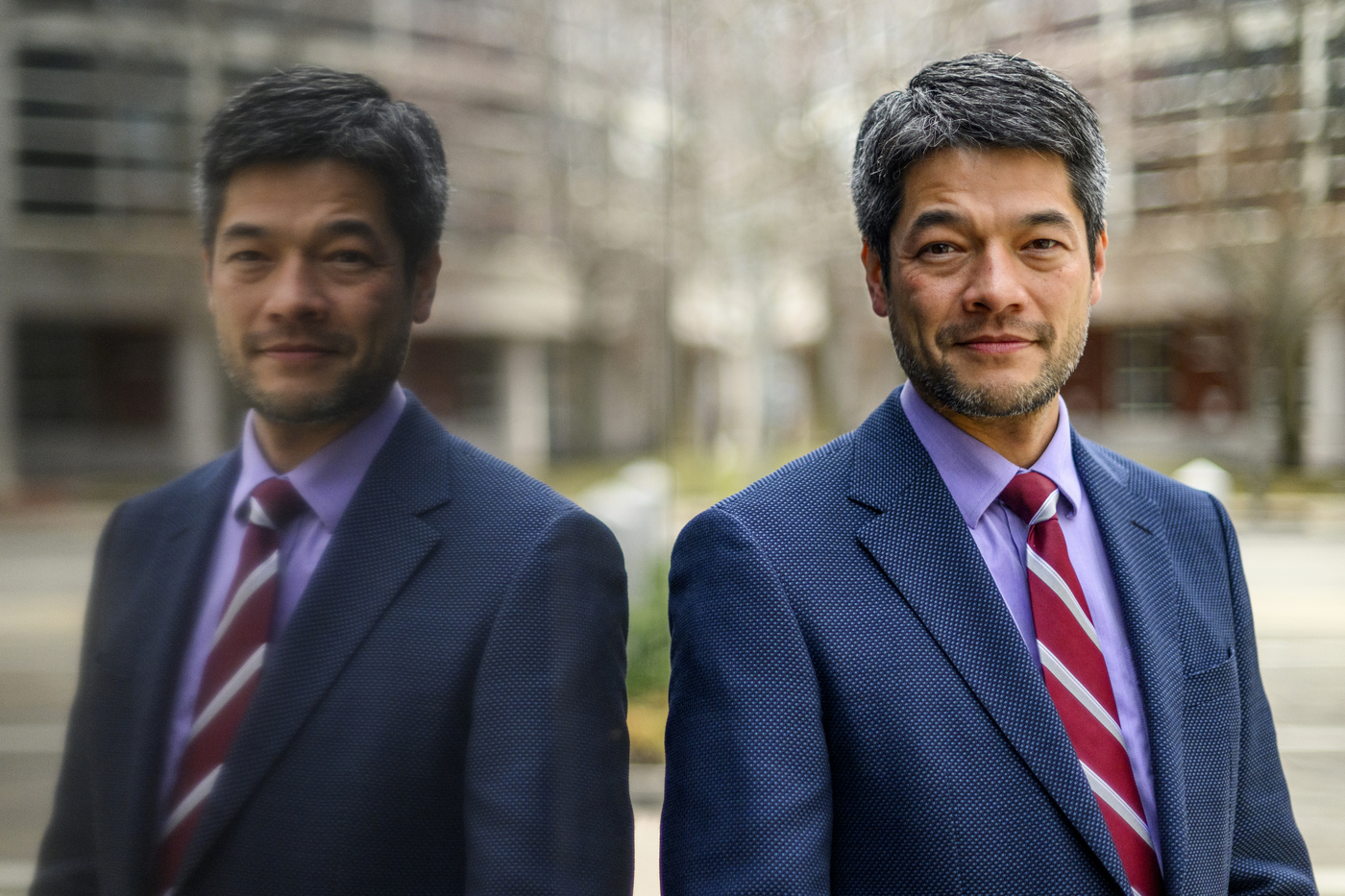

This article originally appeared on Northeastern Global News. It was published by Cody Mello-Klein. Main photo: Matthew Modoono/Northeastern University

Have you ever seen a dark shape out of the corner of your eye and thought it was a person, only to breathe a sigh of relief when you realize it’s a coat rack or another innocuous item in your house? It’s a harmless trick of the eye, but what would happen if that trick was played on something like an autonomous car or a drone?

That question isn’t hypothetical. Kevin Fu, a professor of engineering and computer science at Northeastern University who specializes in finding and exploiting new technologies, figured out how to make the kind of self-driving cars Elon Musk wants to put on the road hallucinate.

Kevin Fu, professor of electrical and computer engineering and computer science. Photo by Matthew Modoono/Northeastern University

By revealing an entirely new kind of cyberattack, an “acoustic adversarial” form of machine learning that Fu and his team have aptly dubbed Poltergeist attacks, Fu hopes to get ahead of the ways hackers could exploit these technologies, with disastrous consequences.

“There are just so many things we all take for granted,” Fu says. “I’m sure I do and just don’t realize because we abstract things away; otherwise, you’ll never be able to walk outside. … The problem with abstraction is it hides things to make engineering tractable, but it hides things and makes these assumptions. There might be a one in a billion chance, but in computer security, the adversary makes that one in a billion happen 100% of the time.”

Poltergeist is about more than just jamming or interfering with technology like some other forms of cyberattacks. Fu says this method creates “false coherent realities,” optical illusions for computers that utilize machine learning to make decisions.

Similar to Fu’s work in extracting audio from still images, Poltergeist exploits the optical image stabilization found in most modern cameras, from smartphones to autonomous cars. This technology is designed to detect the movement and shakiness of the photographer and adjust the lens to ensure photos are not a blurry mess.

“Normally, it’s used to deblur, but because it has a sensor inside of it and those sensors are made of materials, if you hit the acoustic resonant frequency of those materials, just like the opera singer who hits the high note that shatters a wine glass, if you hit the right note, you can cause those sensors to sense false information,” Fu says.

Read full story at Northeastern Global News